SPATIAL RESOLUTION

Two important considerations when acquiring digital images are the spatial resolution (i.e. how many pixels make up the image), and the intensity resolution (i.e. how many shades of grey can be represented by each pixel).

The spatial resolution of an image determines the size of the smallest object which can be recorded in the image. As the number of pixels in the image increases, the size of the piece of retina represented by each pixel decreases.

However, the number of pixels is not the only factor which limits resolution. The optics of the human eye prevent objects smaller than 8µm from being visible. There is no point using pixels much smaller than this (4µm pixels would be a conservative lower limit on size); an excessive number of pixels requires more disk storage, takes longer to transfer over a network, is more difficult to display, and increases the time required for any automated analysis.

Furthermore, increasing the resolution may also increase the random “noise” seen on the image, since the limited amount of light has to be shared between more pixels.

Some cameras and scanners use a process known as interpolation to increase the apparent number of pixels in the image; this should be avoided since it increases the storage requirements without including any extra true information in the image.

Note that if images are reduced in size (zoomed out) to fit the display, the effective spatial resolution will be reduced. To get the benefit of the full resolution of the original image, the image must be displayed 1:1 (pixel for pixel), which often means that the entire image cannot be viewed at once.
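The loss of resolution when an image is shrunk to fit a display can be estimated with a short calculation. The sketch below is illustrative only: the function name and the example figures (a 2048-pixel-wide image of an 8600µm field shown on a 1024-pixel-wide display) are assumptions, not values taken from any particular system.

```python
def effective_pixel_size_um(field_um, image_pixels, display_pixels):
    """Retina covered by one displayed pixel, in micrometres.

    If the display has fewer pixels than the image, the image is shrunk
    and the display becomes the limiting factor.
    """
    shown_pixels = min(image_pixels, display_pixels)
    return field_um / shown_pixels

# Hypothetical figures: 8600 um field, 2048-pixel image, 1024-pixel display.
native = effective_pixel_size_um(8600, 2048, 2048)  # displayed 1:1
fitted = effective_pixel_size_um(8600, 2048, 1024)  # shrunk to fit
print(round(native, 1), round(fitted, 1))
```

With these assumed figures, fitting the image to the display doubles the size of retina covered by each displayed pixel.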

EXAMPLE 1: A 45° fundus image represents an arc of approximately 8600µm on the retina. If a digital camera which stores images as 1024×1024 pixels is used to acquire photographs, each pixel will represent 8600 / 1024 ≈ 8.4µm in each direction, i.e. an area of about 8.4×8.4µm on the retina. If the image is monochrome with a single byte (8 bits, providing 256 grey levels) used to represent each pixel, the raw image data would be 1 megabyte (MB) in size (a megabyte is 1024 kilobytes; a kilobyte is 1024 bytes, therefore a megabyte is 1048576 bytes). If the image were colour with a single byte per colour channel, then the raw image data would be three times larger, i.e. 3 MB in size.

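EXAMPLE 2: A Canon D30 stores colour images as 2160×1440 pixels. Without any compression, and with a single byte per colour channel as in Example 1, these images will require 2160 × 1440 × 3 = 9331200 bytes, i.e. about 8.9 MB of disk storage each.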
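The arithmetic in the two examples above can be checked with a few lines of Python. This is a minimal sketch; the function names are illustrative, and three bytes per pixel is assumed for colour images (one byte per colour channel).

```python
def pixel_size_um(field_um, pixels_across):
    """Size of retina covered by one pixel, in micrometres."""
    return field_um / pixels_across

def raw_size_mb(width, height, bytes_per_pixel):
    """Uncompressed image size in megabytes (1 MB = 1048576 bytes)."""
    return width * height * bytes_per_pixel / (1024 * 1024)

# Example 1: 45 degree fundus image, 1024 x 1024 pixels
print(round(pixel_size_um(8600, 1024), 1))   # 8.4
print(raw_size_mb(1024, 1024, 1))            # 1.0 (monochrome)
print(raw_size_mb(1024, 1024, 3))            # 3.0 (colour)

# Example 2: 2160 x 1440 colour image
print(round(raw_size_mb(2160, 1440, 3), 1))  # 8.9
```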

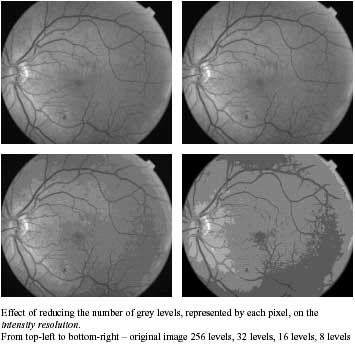

INTENSITY RESOLUTION

The way in which numbers are stored on the computer means that the number of shades of grey (grey levels) that each pixel can represent is normally a power of two (e.g. 64, 256, 1024, 4096 etc.). Typically digital images have 256 grey levels, though the Nikon D1, for instance, can store monochrome images with 4096 levels. The following figure shows the effect of reducing the number of grey levels represented by each pixel.
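The relationship between bits per pixel and grey levels, and the effect of reducing the number of levels, can be sketched as follows. The function names are illustrative, and the reduction shown (rounding each value down to the nearest of a set of evenly spaced levels) is one simple approach assumed here, not necessarily the one used to produce any figure in this document.

```python
def grey_levels(bits):
    """Number of grey levels available with the given bits per pixel."""
    return 2 ** bits

def reduce_levels(pixel, levels, max_value=255):
    """Map an 8-bit pixel value onto `levels` evenly spaced grey levels.

    Assumes `levels` is a power of two no larger than max_value + 1.
    """
    step = (max_value + 1) // levels
    return (pixel // step) * step

print(grey_levels(8), grey_levels(12))        # 256 4096
print([reduce_levels(p, 4) for p in (0, 63, 64, 200, 255)])
# [0, 0, 64, 192, 192]
```

With only 4 levels, pixel values that were originally distinct (such as 200 and 255) become identical, which is why smooth gradations turn into visible bands.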

DYNAMIC RANGE

The number of grey levels also determines the maximum dynamic range of the image. In general, film has a wider dynamic range than a digital camera, and is therefore more forgiving of an incorrect exposure setting. On the other hand, a poor digital image is seen immediately and can be repeated using a different flash power to obtain a good image.

Where possible, digital images should be exposed so that the resulting image uses almost the whole range of grey levels from black to white, taking care not to saturate the image in the bright areas. Underexposed images can be brightened afterwards, but they will be of lower quality, with fewer gradations of grey and more noise in the image.
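A simple exposure check along these lines is to look at how much of the grey-level range an image uses and what fraction of its pixels are saturated. The sketch below assumes an 8-bit monochrome image supplied as a flat list of pixel values; the function name and the returned fields are illustrative.

```python
def exposure_summary(pixels, max_value=255):
    """Report the grey-level range used and the fraction of saturated pixels."""
    lo, hi = min(pixels), max(pixels)
    saturated = sum(1 for p in pixels if p >= max_value)
    return {
        "min": lo,
        "max": hi,
        "range_used": (hi - lo) / max_value,           # 1.0 = full range
        "saturated_fraction": saturated / len(pixels),  # ideally close to 0
    }

# An underexposed image: only the lower part of the range is used.
print(exposure_summary([5, 20, 40, 60, 90]))
```

A low `range_used` suggests underexposure, while a large `saturated_fraction` suggests that detail has been lost in the bright areas.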

ISO Speed Index

The sensitivity of traditional film is described using an ISO speed index. Although digital cameras do not use film, the sensitivity of the detector is commonly described using an equivalent ISO value. As with traditional film, the higher the number, the more sensitive the detector is. Digital cameras often allow the sensitivity to be adjusted; increasing the ISO setting would allow lower flash power to be used. However, this is not advisable in practice because increasing the ISO setting greatly increases the level of noise in the image.

Grey Level Display

Note that even if an image is stored using 256 grey levels, the visual appearance will depend on how many grey levels the display device, whether a monitor or a printer, can display. For instance, a PC display system configured in “high colour” mode can display 65,536 different colours, but only 32 of these colours are shades of grey. Therefore, even for viewing black and white images, colour displays should be set to “true colour” (also known as 24-bit or 32-bit colour) for best results.
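The figure of 32 grey shades can be reproduced with a short calculation. The sketch below assumes that “high colour” uses the common 16-bit layout of 5 bits for red, 6 for green and 5 for blue; that layout is an assumption, not something stated above. A neutral grey needs equal amounts of red, green and blue, so the channel with the fewest bits sets the limit.

```python
def total_colours(red_bits, green_bits, blue_bits):
    """Number of distinct colours the display mode can show."""
    return 2 ** (red_bits + green_bits + blue_bits)

def grey_shades(red_bits, green_bits, blue_bits):
    """Number of neutral greys, limited by the channel with the fewest bits."""
    return 2 ** min(red_bits, green_bits, blue_bits)

# Assumed 5-6-5 "high colour" layout
print(total_colours(5, 6, 5), grey_shades(5, 6, 5))  # 65536 32

# "True colour" with 8 bits per channel
print(grey_shades(8, 8, 8))                           # 256
```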

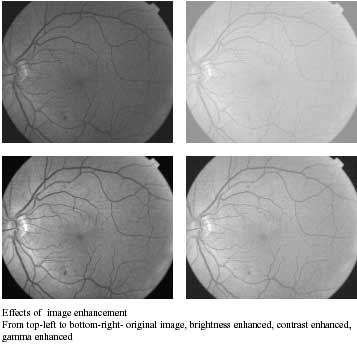

Image Enhancement

Images may require enhancement to compensate for poor image quality or to suit particular display characteristics. It is important to save altered images separately rather than overwriting the original image. The following are simple intensity-related enhancements which can often be applied to the images:

- contrast and brightness manipulation – when the image appears too bright or too dark, or if further contrast is required.

- zooming – when a more magnified image is required; note, however, that resolution may be reduced.

- green filter (red-free) – this is sometimes used to increase the contrast.

BRIGHTNESS

Brightness adjustments are analogous to the brightness control on a television set. A constant value is added to each pixel in the image. For instance, if the brightness is increased by 10 levels then all the pixels which were previously black (value 0) will have a value of 10, all pixels of value 1 will have value 11, etc.
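A minimal sketch of a brightness adjustment on 8-bit pixel values is shown below. The function name is illustrative, and clipping the result to the range 0 to 255 is an assumption added here, since a stored pixel cannot exceed the maximum grey level.

```python
def adjust_brightness(pixels, offset, max_value=255):
    """Add a constant to every pixel, clipping to the valid range (assumed)."""
    return [min(max_value, max(0, p + offset)) for p in pixels]

print(adjust_brightness([0, 1, 250], 10))  # [10, 11, 255]
```

Note that the pixel at 250 is clipped to 255: large brightness increases can saturate the bright areas of the image.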

CONTRAST

Contrast adjustments are analogous to the contrast control on a television set. Each pixel is multiplied by a constant value. For instance, if the contrast is increased by a factor of 2, all the pixels with value 1 will have a value of 2, and all the pixels with value 2 will have a value of 4. The increase in the difference between pixel values is what is perceived as an increase in contrast.
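The multiplication described above can be sketched in the same way. As before, the function name is illustrative and the clipping to the range 0 to 255 is an assumption added for 8-bit images.

```python
def adjust_contrast(pixels, factor, max_value=255):
    """Multiply every pixel by a constant, clipping to the valid range (assumed)."""
    return [min(max_value, max(0, round(p * factor))) for p in pixels]

print(adjust_contrast([1, 2, 200], 2))  # [2, 4, 255]
```

The difference between the first two pixels has doubled from 1 to 2, while the pixel at 200 has been clipped to 255.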

GAMMA

Gamma adjustment (also known as gamma correction) is a power-law transform. It is necessary because capture devices such as cameras, as well as the human eye, do not register an object which emits twice as much light energy as another as being twice as bright. Gamma correction is often applied automatically by capture and display devices.
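A power-law transform on 8-bit pixel values can be sketched as below. Conventions for the gamma value differ between devices and software; this sketch assumes the exponent is applied directly to pixel values normalised to the range 0 to 1, so that an exponent below 1 brightens the mid-tones and an exponent above 1 darkens them. The function name is illustrative.

```python
def apply_gamma(pixels, exponent, max_value=255):
    """Power-law transform: out = max * (in / max) ** exponent."""
    return [round(max_value * (p / max_value) ** exponent) for p in pixels]

dark_to_bright = [0, 64, 128, 255]
print(apply_gamma(dark_to_bright, 0.5))  # mid-tones brightened
print(apply_gamma(dark_to_bright, 1.0))  # unchanged
```

Unlike a brightness or contrast adjustment, black and white stay fixed at 0 and 255; only the intermediate grey levels are redistributed.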

GREYLEVEL STRETCHING

Captured images often do not use the entire range of possible grey levels. Such images often appear dark and lacking in contrast. Greylevel stretching (sometimes known as histogram stretching) adjusts the grey levels in the image so that they cover the full range of levels, brightening the image and increasing the contrast.

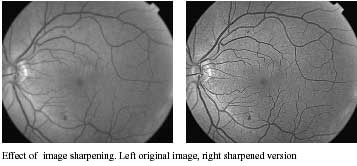
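Greylevel stretching can be sketched as a linear rescaling which maps the darkest pixel in the image to 0 and the brightest to the maximum grey level. This is a minimal version assuming an 8-bit monochrome image held as a flat list; practical implementations often ignore a small percentage of extreme pixels so that a few outliers do not dominate, which is not shown here.

```python
def stretch_grey_levels(pixels, max_value=255):
    """Linearly rescale so the image spans 0..max_value."""
    lo, hi = min(pixels), max(pixels)
    if hi == lo:
        return list(pixels)  # flat image: nothing to stretch
    return [round((p - lo) * max_value / (hi - lo)) for p in pixels]

print(stretch_grey_levels([50, 75, 150]))  # [0, 64, 255]
```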

SHARPENING

Some cameras and software allow images to be sharpened. While sharpening can make images visually more appealing, it can also introduce artefacts and greatly increase image noise, which could mislead manual and automated interpretation of the image. It should therefore be used with care. Only the minimum of sharpening should be applied when images are acquired; they can be sharpened later for viewing if necessary.

NOISE REDUCTION / SMOOTHING

Noise reduction is really the opposite of sharpening as it smooths or blurs the image. It should not be necessary for well exposed images.
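The blurring effect of smoothing can be seen with a very simple filter. The sketch below applies a 3-pixel moving average along a single row of pixels; it is chosen here only for illustration, and real noise reduction software typically works in two dimensions with more sophisticated filters.

```python
def smooth_row(row):
    """3-pixel moving average along one row; edge pixels are left unchanged."""
    out = list(row)
    for i in range(1, len(row) - 1):
        out[i] = round((row[i - 1] + row[i] + row[i + 1]) / 3)
    return out

# A single bright pixel (noise, or a small genuine feature) on a dark background
print(smooth_row([10, 10, 100, 10, 10]))  # [10, 40, 40, 40, 10]
```

The isolated bright pixel is reduced from 100 to 40, but it is also spread over its neighbours, so small genuine features are blurred in exactly the same way as noise.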

WHITE BALANCE

Image colour depends on both the colour of the object being photographed and the colour of the illumination source (often described as the colour temperature of the light). Most digital cameras, like traditional film, assume natural daylight illumination; pictures taken indoors with tungsten lamp illumination have a yellow/orange tint because tungsten light is much yellower than daylight. Digital cameras usually have a setting, known as the white balance, to compensate for this. For fundus images it should be set to the flash or daylight setting.